Introduction

In telephonic customer support, challenges abound, from understanding a caller's context to troubleshooting efficiently. These hurdles often lead to longer wait times and a heavier workload for support agents. Retrieval-Augmented Generation (RAG) offers a promising way to address them. RAG gives chatbots real-time access to a customized knowledge base and an understanding of the conversation's context. With both, a chatbot can answer more accurately and resolve more issues without escalation. In this article, we look at how RAG enhances chatbot capabilities in telephonic support, covering how it works and how its architecture fits together.

Problem Statement

Today's telephonic customer support often relies on menu-driven systems or basic chatbots. These systems can be frustrating for users who encounter limitations in:

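Context Understanding: Users often struggle to describe their issues in the exact terms these systems expect, and the systems cannot draw on earlier parts of the conversation to fill the gaps. The result is irrelevant prompts or dead ends in the conversation.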

Accuracy: Pre-recorded responses often provide outdated or incorrect information, undermining the effectiveness of the support system.

Troubleshooting Efficiency: Basic chatbots often struggle to identify and address specific user issues, necessitating human intervention and prolonging resolution times.

These limitations result in longer wait times, user frustration, and increased workload for human support agents, highlighting the need for a more effective and efficient customer support solution.

Enhancing System Performance with RAG

Retrieval-Augmented Generation (RAG) is a significant step forward for chatbots. It gives the system real-time access to a customized knowledge base and lets it take the context of the conversation into account.

Here's a breakdown of how RAG contributes to building a more effective system:

Knowledge Integration: RAG connects the chatbot to existing knowledge bases such as support articles and FAQs. Answers are generated from these documents rather than from pre-recorded scripts, so the chatbot can deliver accurate, up-to-date solutions as long as the knowledge base itself is kept current.

Improved Troubleshooting: By leveraging relevant knowledge, RAG enhances the chatbot's troubleshooting capabilities. It enables the system to recommend tailored troubleshooting steps based on the user's specific situation, thereby expediting the resolution process and reducing the need for human intervention.

Contextual Rephrasing: A RAG system can analyze the chat history together with the user's latest query. It then uses a language model to rephrase the query into a more precise search question. This helps it retrieve the knowledge base articles that match the user's specific needs, rather than returning generic responses.
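To make contextual rephrasing concrete, here is a minimal Python sketch of a prompt a system might send to an LLM to turn a follow-up question into a standalone search query. The function name `build_rephrase_prompt`, the prompt wording, and the router example are hypothetical illustrations, not a prescribed format. The LLM call itself is left out.

```python
# Hypothetical helper: builds a "condense the follow-up question" prompt.
# The wording is an illustrative assumption; send the result to your LLM of choice.


def build_rephrase_prompt(chat_history: list[tuple[str, str]], question: str) -> str:
    """Ask an LLM to rewrite `question` as a standalone knowledge-base query."""
    if chat_history:
        turns = "\n".join(f"{speaker}: {text}" for speaker, text in chat_history)
    else:
        turns = "(no previous turns)"
    return (
        "Given the conversation below and a follow-up question, rewrite the "
        "follow-up as one standalone question that includes every detail needed "
        "to search a customer support knowledge base.\n\n"
        f"Conversation:\n{turns}\n\n"
        f"Follow-up question: {question}\n"
        "Standalone question:"
    )


# Made-up example: "it still happens" only makes sense in light of earlier turns.
history = [
    ("User", "My X200 router keeps dropping the Wi-Fi connection."),
    ("Agent", "Have you tried restarting it?"),
]
print(build_rephrase_prompt(history, "Yes, and it still happens. What next?"))
```

The LLM's reply to this prompt (for example, a question that names the router model and the symptom) is what gets sent to the knowledge base search.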

Operational Mechanism of RAG

Much like a capable support agent in your organization, RAG uses its knowledge and the resources available to it to answer your inquiries. Here is a breakdown of its operational process:

Retrieval: Research First

Much like a support agent consulting internal documents, RAG begins by searching your company's document repository through a specialized store called a vector database. The database holds numerical representations (embeddings) of your documents. These let RAG find the passages closest in meaning to your query, not just the ones that share its exact keywords.
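At its core, this retrieval step is a nearest-neighbor search over embedding vectors. The sketch below assumes the documents and the query have already been converted to embeddings; the 3-dimensional vectors and document IDs are made-up toy values. It ranks documents by cosine similarity, one common choice of metric, using a brute-force scan. A production vector database typically uses approximate nearest-neighbor indexes instead, but the ranking idea is the same.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], index: list[tuple[str, list[float]]], k: int) -> list[str]:
    """Brute-force search: return the IDs of the k documents most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Toy embeddings (made-up values); real embeddings typically have
# hundreds or thousands of dimensions.
index = [
    ("reset-router", [0.9, 0.1, 0.0]),
    ("billing-faq", [0.0, 0.2, 0.9]),
    ("wifi-dropouts", [0.8, 0.3, 0.1]),
]
query = [1.0, 0.2, 0.0]  # stands in for the embedding of a router-related question
print(top_k(query, index, k=2))  # prints ['reset-router', 'wifi-dropouts']
```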

Augmentation: Setting the Context

Much like a support agent asking clarifying questions or reviewing past tickets, RAG combines the retrieved information with your question to build a targeted prompt. This prompt gives the language model the context it needs to understand your inquiry and prepare a well-informed response.
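Here is a minimal sketch of the augmentation step. It assumes the retrieved passages arrive as plain strings, numbers them, and places them in the prompt ahead of the question. The function name, the instruction wording, and the sample passages are hypothetical. Real systems tune this template to their model and domain.

```python
def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user's question into one prompt."""
    # Number each passage so the model (and anyone debugging) can tell sources apart.
    context = "\n\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "You are a customer support assistant. Answer the question using only the "
        "context below. If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Made-up passages standing in for the retrieval results.
passages = [
    "To reset the router, hold the reset button for 10 seconds.",
    "Frequent Wi-Fi drops can be caused by outdated firmware.",
]
print(build_augmented_prompt("How do I reset my router?", passages))
```

Telling the model to answer only from the supplied context, and to say when it cannot, is what keeps the response grounded in your documents.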

Generation: Delivering the Answer

Finally, RAG draws on the language capabilities of a large language model (LLM), much like a support agent's communication skills. It uses them to craft a coherent, helpful response based on the retrieved information and the augmented prompt.
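You can sketch the generation step as wrapping the augmented prompt in chat-style messages and handing them to a model. To keep the example self-contained, the model is a pluggable `chat` function, which is a hypothetical interface. The `system` and `user` message roles follow the common chat-completion format. The system message wording is an assumption.

```python
from typing import Callable

Message = dict[str, str]  # {"role": ..., "content": ...}


def generate_answer(augmented_prompt: str, chat: Callable[[list[Message]], str]) -> str:
    """Send the augmented prompt to a chat model and return its trimmed reply."""
    messages: list[Message] = [
        # The system message sets tone and style; this wording is an assumption.
        {"role": "system", "content": "You are a concise, friendly phone support agent."},
        {"role": "user", "content": augmented_prompt},
    ]
    return chat(messages).strip()


# A stand-in model for local experiments; swap in a real LLM client in practice.
def fake_model(messages: list[Message]) -> str:
    return "  Hold the reset button for 10 seconds.  "


print(generate_answer("Question: How do I reset my router?\nAnswer:", fake_model))
```

With the official `openai` Python package (v1 or later), `chat` could wrap `client.chat.completions.create(model=..., messages=messages)` and return `response.choices[0].message.content`. The choice of model is left to you.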

This three-step process (retrieval, augmentation, generation) allows RAG to function like a highly knowledgeable and efficient support person, tailored to meet the specific needs of your company.

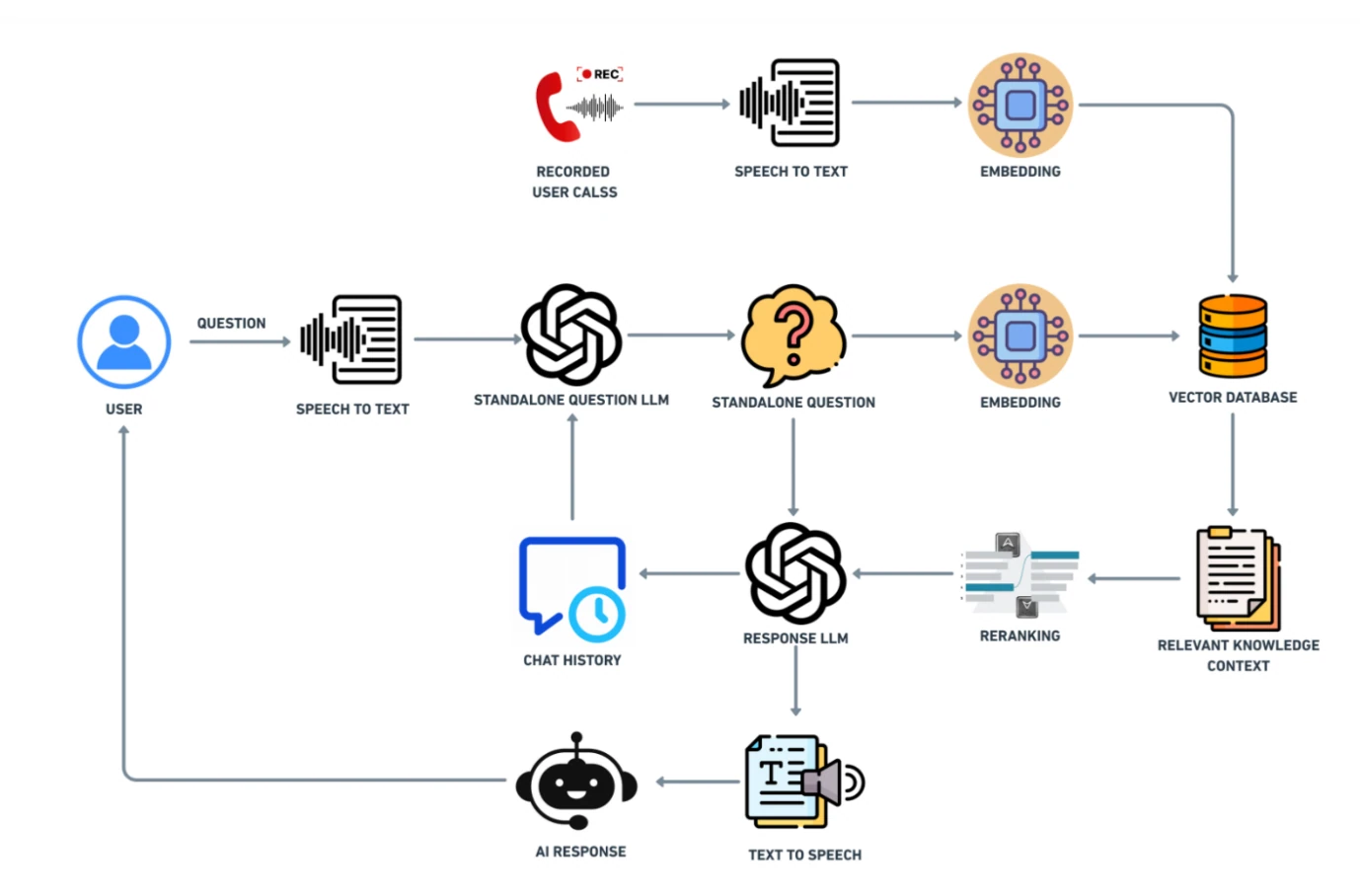

Architecture for Telephonic Customer Support

To make the RAG architecture concrete, let's look at how it works in a real-world scenario: a voice support chatbot.

Components

- User Input:

- The system begins with a user initiating a support interaction and posing a question.

- Context Understanding:

- Speech-to-Text (STT): Utilizing an STT model, the user's verbal inquiry is transcribed into text.

- Chat History Capture: The system records the conversation history, which is empty at the start of a call.

- LLM for Contextual Rephrasing:

- Large Language Model (LLM): Using a capable LLM such as one of OpenAI's GPT models, the system analyzes the chat history and rewrites the user's question so that it is specific enough for a knowledge base search.

- A more specific question retrieves more relevant documents, which in turn makes the final response more accurate.

- Vector Search:

- Rephrased Question as Query: The rephrased question is used as the query against the vector database that stores the customer support documents.

- Top K Retrieval: The database returns the K articles most relevant to the query. K can be tuned to the use case (e.g., K = 10 retrieves the top 10 documents).

- Knowledge Context Reranking:

- To make the best use of the context passed to the response model, a reranking step is applied.

- Reranking and Selection: The retrieved documents are reordered by relevance to the query, and only the most relevant ones (Top K) are kept. This second K is also adaptable to the use case (e.g., K = 3 keeps the three most relevant of the retrieved documents).

- Response Generation with Knowledge:

- Response LLM: The top-ranked articles and the rephrased question are passed to a second GPT model.

- Response Crafting: Drawing on the knowledge in those articles, the model composes a complete response to the user's query.

- Output Generation:

- Chat History Update: The system logs both the user's inquiry and the generated response.

- Text-to-Speech (TTS): Finally, a TTS engine converts the text response into audio so the user can hear the chatbot's answer.
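The components above can be tied together in a single turn handler. This Python sketch is an illustration under stated assumptions, not a reference implementation. Every external service (STT, LLM, vector search, reranker, TTS) is injected as a plain function with a hypothetical signature. The defaults of 10 retrieved and 3 reranked passages mirror the example K values above.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SupportPipeline:
    # Hypothetical interfaces; each stands in for a real service.
    stt: Callable[[bytes], str]                      # audio -> transcript
    llm: Callable[[str], str]                        # prompt -> completion
    search: Callable[[str, int], list[str]]          # query, k -> top-k passages
    rerank: Callable[[str, list[str]], list[float]]  # query, passages -> relevance scores
    tts: Callable[[str], bytes]                      # text -> audio
    retrieve_k: int = 10  # candidates taken from the vector search
    rerank_k: int = 3     # passages kept after reranking
    history: list[tuple[str, str]] = field(default_factory=list)

    def handle_turn(self, audio: bytes) -> bytes:
        # 1. Speech-to-text.
        question = self.stt(audio)
        # 2. Contextual rephrasing with the chat history (skipped while it is empty).
        if self.history:
            turns = "\n".join(f"{who}: {text}" for who, text in self.history)
            query = self.llm(
                f"Conversation:\n{turns}\n\nRewrite this follow-up as a standalone "
                f"search query: {question}\nStandalone query:"
            ).strip()
        else:
            query = question
        # 3. Vector search: fetch the top retrieve_k candidates.
        candidates = self.search(query, self.retrieve_k)
        # 4. Reranking: sort candidates by score and keep the best rerank_k.
        scores = self.rerank(query, candidates)
        ranked = sorted(zip(scores, candidates), key=lambda pair: pair[0], reverse=True)
        context = [passage for _, passage in ranked[: self.rerank_k]]
        # 5. Response generation grounded in the reranked passages.
        context_text = "\n".join(f"- {p}" for p in context)
        answer = self.llm(
            f"Answer using only this context:\n{context_text}\n\n"
            f"Question: {query}\nAnswer:"
        ).strip()
        # 6. Record both sides of the turn, then convert the answer to speech.
        self.history.append(("User", question))
        self.history.append(("Agent", answer))
        return self.tts(answer)
```

Skipping the rephrasing call when the history is empty is a small design choice. On the first turn there is no earlier context to fold in, so the transcribed question can go straight to the vector search. Injecting each service as a function also makes the pipeline easy to test with fakes before you wire in real providers.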

Ready to Get Started with RAG?

Numerous open-source libraries and tools facilitate RAG implementation with OpenAI. For further exploration of this compelling technology, consider leveraging resources like the OpenAI API and libraries such as LangChain.

With RAG, you can build OpenAI-powered applications that answer from your own up-to-date knowledge rather than relying only on what the model learned during training.

Talk to us for more insights

Want to see what RAG could do for your business? We're just a ping away. Let's get connected.